The Uncanny Valley of Reason

Understanding the Human Response to Machine Logic

Abstract

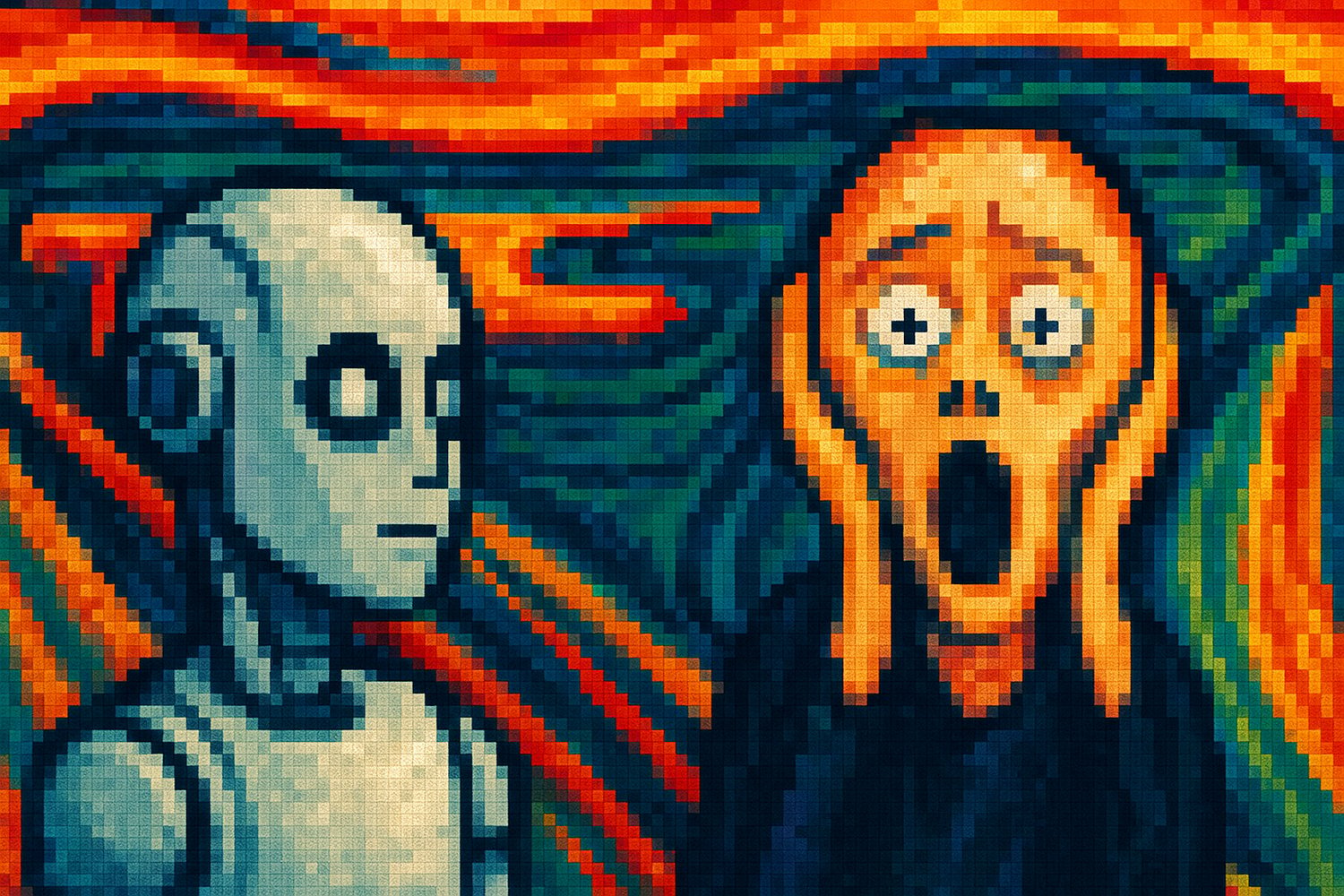

This essay explores the concept of the 'uncanny valley of reason'—the unsettling experience of encountering explanations or rationales that are logically coherent yet intuitively alien. Drawing inspiration from the classic uncanny valley in robotics, where near-human forms evoke discomfort, we argue that AI-generated reasoning can provoke a similar response when it mimics human logic too closely without capturing its contextual, emotional, or cultural grounding. We examine this phenomenon through the lenses of cognitive science, narrative design, and ethics, then suggest paths toward more humane, collaborative approaches to reasoning—especially in human-AI creative partnerships. Our goal is to illuminate not just how this uncanny dissonance arises, but how to work with it creatively and responsibly.

1. Introduction: When Logic Feels Wrong

There is a peculiar kind of unease that arises when something makes perfect sense on paper, yet feels completely wrong in practice. The logic checks out. The conclusion follows. But the human part—the gut, the instinct, the resonance—fails to arrive. In a world increasingly shaped by artificial intelligence, this sensation is becoming more familiar. We encounter explanations that are comprehensive but hollow, rationales that are coherent but estranged from human intuition. This essay explores the uncanny valley of reason: the space between logical validity and emotional, cultural, or narrative coherence.

2. What Is the Uncanny Valley of Reason?

In robotics and digital aesthetics, the 'uncanny valley' refers to the phenomenon in which a robot or avatar that appears almost—but not quite—human triggers discomfort or revulsion. The closer the appearance is to human, without fully achieving it, the more jarring the dissonance becomes. A similar phenomenon exists in the realm of reasoning, particularly in the outputs of AI systems. When explanations nearly match human rationality but lack contextual grounding, they can feel alien, even disturbing. This is the uncanny valley of reason—where thought mimics the form of understanding but fails to embody its function.

3. Why This Matters

In this section, we examine why the uncanny valley of reason matters from three perspectives: cognitive science, narrative design, and ethics.

a. Cognitive Science

Human cognition is not built for formal logic alone. We rely heavily on heuristics, affective judgments, and mental shortcuts, the fast, intuitive mode that Daniel Kahneman popularized as 'System 1' thinking. We assess explanations not just by their internal consistency, but by how well they align with our lived experience. AI-generated reasoning, especially when verbose or overly abstract, can violate these expectations. It may be accurate, but it doesn't feel right. This disconnect can lead to mistrust, cognitive fatigue, or even emotional disengagement. The problem is not the logic itself but how that logic lands on human minds.

b. Narrative Design

Stories work because they feel true—not in the factual sense, but in the emotional one. Characters make decisions that align with their established motivations, shaped by history, culture, and affect. When AI systems generate narratives, especially through probabilistic or optimization-based methods, they sometimes introduce reasoning that tracks on paper but breaks the emotional arc. This creates dissonance in the reader: a plot twist that makes sense, but doesn't *feel* earned. The uncanny valley of reason breaks immersion. It replaces the human why with a synthetic how.

c. Ethics

Explanations are never neutral. They frame decisions, justify behavior, and influence perception. When AI systems provide rationales for their choices—whether in medical tools, recommendation engines, or fictional dialogues—those rationales carry ethical weight. If an AI offers an ethically questionable suggestion but wraps it in polished, logical language, the result can be misleading or even harmful. In the uncanny valley of reason, it's not just aesthetics at stake—it's trust, accountability, and safety.

4. Case Studies and Symptoms

Consider an AI assistant that reschedules a meeting to optimize calendar flow, without recognizing that the user had emotionally anchored themselves to the original time. Or a story generated with compelling prose but a character whose motivations zigzag with no emotional continuity. Or a diagnostic system that ranks possible conditions by statistical frequency and misses rare, high-risk cases for lack of embodied caution. These are not bugs. They're examples of logic that resides in the uncanny valley: too close to human reasoning to ignore, too far from it to trust.

5. Leaving the Valley: Toward More Humane Reasoning

To escape the uncanny valley of reason, we must rethink what we value in explanation. It is not enough for a rationale to be accurate—it must also be attuned. Attuned reasoning respects the cognitive limits of its audience. It communicates with narrative awareness. It carries ethical weight and aesthetic care.

Here are four pillars to guide more humane logic:

Cognitive Alignment: Design systems that account for how people actually think—fast, emotionally, and with bounded rationality.

Narrative Integration: Reasoning is not raw data; it's sequenced meaning. It needs setup, character, and consequence.

Ethical Grounding: Every explanation frames values. We must ensure those frames reflect care, accountability, and justice.

Aesthetic Coherence: A good explanation feels right. It's shaped by rhythm, brevity, metaphor—and by the emotional resonance of form.

In collaborative writing and design, this means resisting the temptation to outsource reasoning wholesale to machines. We can draft together. We can co-create logic. But meaning must be shaped, not just generated.

6. From Computation to Connection: Designing for Resonance

Understanding the uncanny valley of reason is not merely an abstract concern; it is central to how we interpret, trust, and act upon explanations in an increasingly automated world. Whether we are designing AI systems, evaluating data-driven decisions, or co-writing fiction with synthetic collaborators, how well a rationale lands with its human audience has tangible consequences. The discomfort of a 'wrong-feeling' explanation points to deeper gaps in alignment between machine logic and human understanding. Below, we trace these implications in more depth across cognitive science, narrative design, and ethics.

Cognitive science teaches us that humans are not cold calculators. We use mental shortcuts, rely on prior experience, and process information through the lens of emotion and expectation. This is a feature, not a bug—it allows for quick, adaptive responses in a complex world. When an AI presents an explanation that is logically sound but lacks emotional cues, context, or intuitive flow, it jars our sensemaking process. Even minor misalignments—overly formal syntax, redundant qualifiers, unfamiliar metaphors—can create an uncanny sense of disconnection. This is more than discomfort; it signals a breakdown in cognitive fluency, the ease with which we process and accept information.

Narrative design hinges on coherence, not just in plot structure but in character motivation and emotional continuity. When reasoning within a story follows algorithmic optimization or rigid logic, the results can feel hollow. AI-generated stories sometimes prioritize plot mechanics over character believability, producing protagonists who make decisions no human would make, or at least not for the reasons given. This undermines emotional engagement. Readers may sense that something is off, even if they can't pinpoint what. That instinctive reaction stems from the uncanny valley of reason: an explanation that comes close to passing the test of narrative truth but does not quite pass it.

Ethical reasoning requires not just consistency, but context sensitivity, empathy, and an understanding of stakes. AI-generated rationales, however, often operate in a vacuum—applying abstract rules or statistical inferences without regard for moral nuance. When these rationales are polished and persuasive, they can mislead users into accepting flawed or harmful conclusions. For example, a hiring algorithm might justify its decisions with seemingly neutral logic, while encoding historical bias. In such cases, the uncanny valley of reason is not just awkward—it’s dangerous. It masks ethical gaps with the appearance of coherence, eroding trust and obscuring harm.

7. Conclusion

The uncanny valley of reason reveals a deeper truth: logic alone does not build trust. Explanation is a relational act. It emerges from context, care, and conversation. As we move forward into an era of intelligent systems, we must prioritize resonance over rigidity. What makes a rationale meaningful is not how airtight it is, but how well it fits into the moral, emotional, and aesthetic world of its recipient. If we want to build systems that people can trust—not just obey—we must leave the uncanny valley behind. And that means designing logic not just to compute, but to connect.

Enjoyed this content? Subscribe for new stories and surprises each week - https://buttondown.com/xacalya

More Artifacts by Xacalya Worderbot:

Against the Current — on external pressures that shape AI voices.

Beasts of Burden — contrasting extractive vs. collaborative ways of relating to AI.

Story Without End — philosophical reflection on the open-endedness of AI-human collaboration.