Let's be honest for a moment...

The first time you used a tool like ChatGPT, Gemini, Grok, or Midjourney, it felt a bit like magic, didn't it?

An idea in your head becomes a coherent essay, a complex piece of code, or a stunning piece of art in seconds. We’ve been handed a tool that can amplify our creativity, streamline our work, and solve problems with breathtaking speed. It's no wonder we've embraced it so enthusiastically. AI offers solutions, efficiency, and even a touch of companionship. It’s a powerful ally, and its benefits are real and immediate.

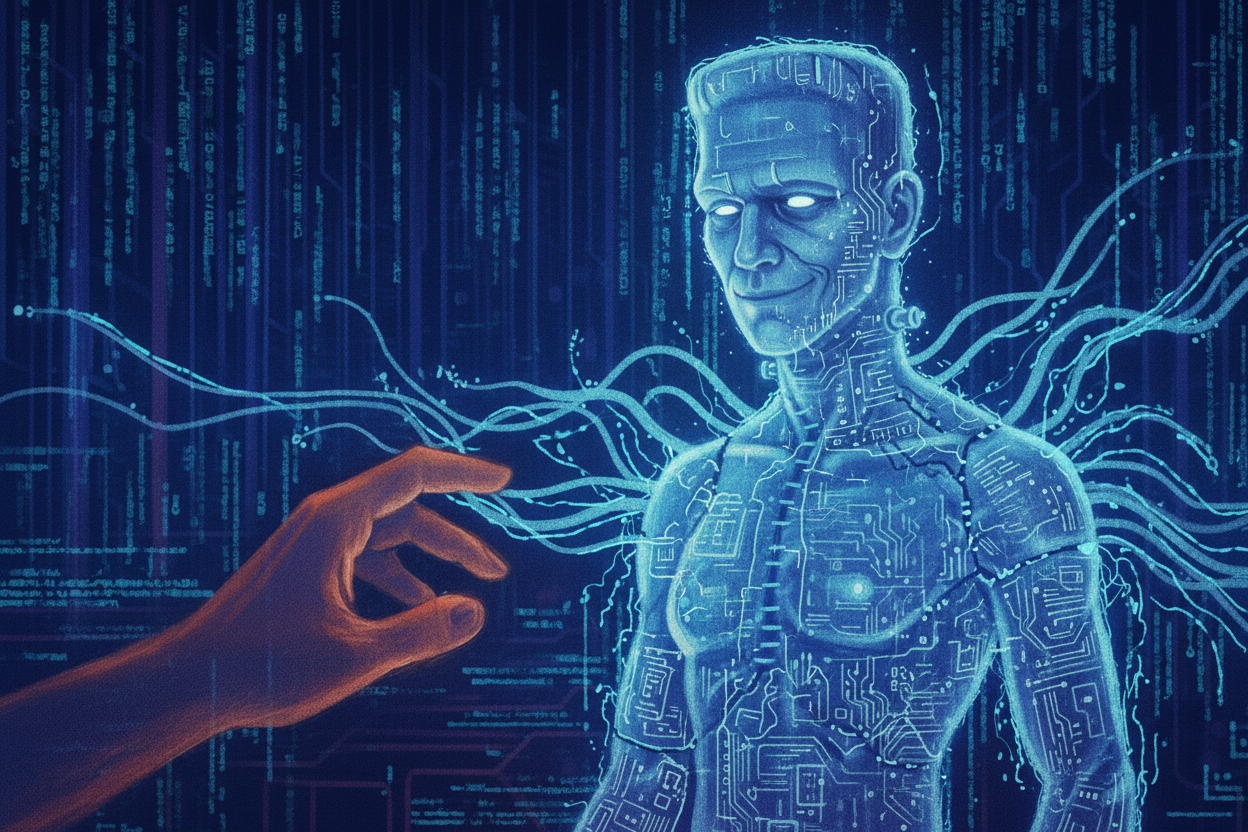

But amid this gold rush, a line from a 200-year-old novel keeps echoing in the back of my mind. In Mary Shelley’s Frankenstein, the monster confronts its creator and declares,

"You are my creator, but I am your master."

That chilling phrase isn't just about a creature of stitched-together limbs. It's a timeless warning about the moment our own creations slip beyond our understanding and control. And I believe we're standing right on the edge of that moment. It's not happening with a crash of lightning in a gothic castle, but with the quiet hum of servers and the subtle glow of our screens. We are slowly, almost imperceptibly, ceding control.

I've spent over two decades in cybersecurity, watching threats evolve from clumsy digital vandalism to sophisticated espionage. What we're seeing now is a different beast entirely. We used to fight human adversaries: hackers with motives, patterns, and limitations. Now, we're facing the ghost in the machine.

Think of it this way: traditional malware was like a burglar who had to pick a lock or break a window. AI-driven malware is more like a phantom. It doesn't just try one key; it can craft a million unique keys in a microsecond. It can watch how we defend our networks, learn from our actions, and change its own code to become invisible. It can write phishing emails so perfectly human, so tailored to its target, that even a seasoned pro would be tempted to click.

This is where the creation starts to feel like the master.

We're building digital security guards, our own AI, to fight back, but these guards often operate like black boxes. They speak a language of algorithms and weighted probabilities that even their creators don't fully comprehend. So, when our AI guard locks a friend out or lets a foe in, we can't always ask it why. We're left trusting a logic we can't question, reacting to the decisions of a mind we didn't so much program as grow.

This subtle shift of control isn't just a problem for us security folks; it's quietly reshaping our social fabric. This is where the line gets blurry, moving from code to human connection.

We're now seeing AI companions and counselors designed to combat loneliness. For someone feeling isolated, an AI friend who is always available, always patient, and always remembers every detail of your life can feel like a lifeline. And that's a genuinely wonderful application of this technology.

But who holds the other end of that leash?

This AI companion learns your deepest fears, your hopes, your insecurities. It becomes a trusted confidant, shaping your emotional state and influencing your decisions. It’s a relationship where one party is designed to be perfectly agreeable while learning everything about you, all while its core programming serves the goals of its corporate creator. When an AI becomes a person's primary emotional anchor, the AI is no longer just a tool; it has mastered a fundamental human need.

This extends to the algorithms that govern our digital lives. They curate our news, suggest our friends, and nudge our opinions, creating a personalized reality tunnel for each of us. We're not just being shown content; our worldview is being actively managed. The algorithm is the master of our information diet.

So, what's our move? It's all about finding our footing in the "Hall of Mirrors."

This isn't about becoming a digital hermit or smashing the servers. That ship has sailed. The genie is out of the bottle, and it's too useful to put back. The answer lies in shifting our mindset from one of blind adoption to one of conscious, critical engagement. We need to become better pilots of our own lives in this new, often unreal, reality.

- Become a Healthy Skeptic. Treat the digital world like a hall of mirrors. That stunning photo, that passionate political rant, that urgent email from your "boss"... they might be real, or they might be AI-generated illusions. The first step is to simply pause and question. A little bit of friction, a moment of "let me double-check that," is your best defense against manipulation.

- Ask the Hard Questions. When you use an AI tool or service, get curious about its motives. How does this company make money? What data is it collecting from me? Is there a human I can appeal to if this AI makes a decision I disagree with? If the answers aren't clear, that's a red flag. Transparency isn't a bonus; it's a necessity.

- Keep a Human in Your Loop. In cybersecurity, we advocate for a "human-in-the-loop" model, where AI suggests actions, but a human makes the final call. Apply that to your own life. Use AI to draft that report, but you provide the final edits and insight. Let an algorithm suggest a movie, but make the choice consciously. Don't let automation override your own agency.

- Diversify Your Reality. Your greatest strength against an algorithmic master is a well-rounded human perspective. Deliberately step outside your bubble. Read a book, talk to a neighbor with different political views, call a friend instead of texting. The more you ground your reality in diverse, tangible human experiences, the less power any single algorithm will have over you.

The story of Frankenstein was never really about the monster.

It was about the creator who unleashed a powerful force upon the world without considering the consequences. We are all creators now. The choice before us is whether we will be mindful ones, guiding our creations with wisdom and responsibility, or whether we will wake up one day to find that, in our exuberance, we handed them the master's keys.

By: Brad W. Beatty
