AI Inference Chip Market: The Coming Industry Inflection Point

The artificial intelligence chip market is approaching a critical inflection point at which industry focus will shift decisively from scaling training workloads to optimizing inference performance. This transition, expected to accelerate between 2025 and 2027, creates significant investment opportunities in specialized inference chip companies and challenges NVIDIA's (NVDA) current market dominance.

Key Investment Thesis:

- Inference workloads will represent 70-80% of AI compute by 2030, versus today's training-dominated spending

- Specialized inference-only chips demonstrate 5-10x performance advantages over general-purpose GPUs

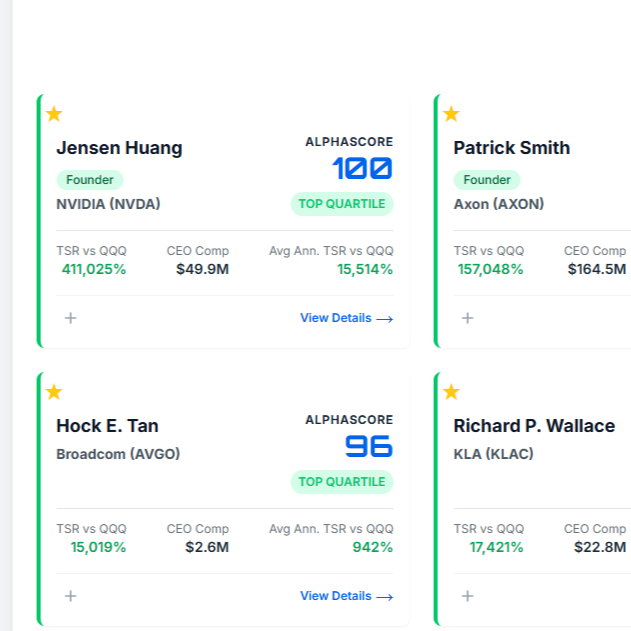

- Market fragmentation creates opportunities for nimble competitors to challenge NVIDIA's dominant position

- Economic incentives increasingly favor inference optimization over larger model development

While training has historically dominated computational spending, inference is the true high-volume activity in generative AI applications. At steady state, we estimate that 80-90% of AI cloud spend will go to inference, because inference costs recur continuously after deployment while training occurs in distinct, intensive phases.
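
The arithmetic behind this argument can be sketched in a few lines of Python. This is a minimal illustration, not our forecasting model: the function name `inference_share` and both cost figures are hypothetical, chosen only to show how a recurring inference bill overtakes a one-off training bill as deployment lengthens.

```python
def inference_share(training_cost, inference_cost_per_month, months):
    """Return inference's fraction of cumulative spend after `months` of deployment.

    Assumes a single up-front training run and a flat monthly inference cost.
    """
    inference_total = inference_cost_per_month * months
    return inference_total / (training_cost + inference_total)


# Hypothetical figures in arbitrary units; NOT estimates from this note.
TRAINING_COST = 100.0        # one-off training run
INFERENCE_PER_MONTH = 25.0   # recurring serving cost

for months in (1, 4, 12, 36):
    share = inference_share(TRAINING_COST, INFERENCE_PER_MONTH, months)
    print(f"{months:>2} months deployed: inference = {share:.0%} of cumulative spend")
```

Under these assumed inputs, inference accounts for 20% of cumulative spend after one month, 50% after four months, 75% after a year, and 90% after three years. The sketch ignores retraining cycles and usage growth, both of which would shift the curve; it only illustrates why a continuous cost eventually dominates an episodic one.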