LLM Book 3 - The Transformer Model, Self-Attention, and BERT for Novices

Table of Contents

1. Introduction

1.1 What is Language Modeling?

1.2 Why is Language Modeling Important?

1.3 Challenges of Language Modeling

1.4 Overview of the Book

2. The Transformer Model

2.1 What is a Transformer?

2.2 How Does a Transformer Work?

2.3 Encoder and Decoder Blocks

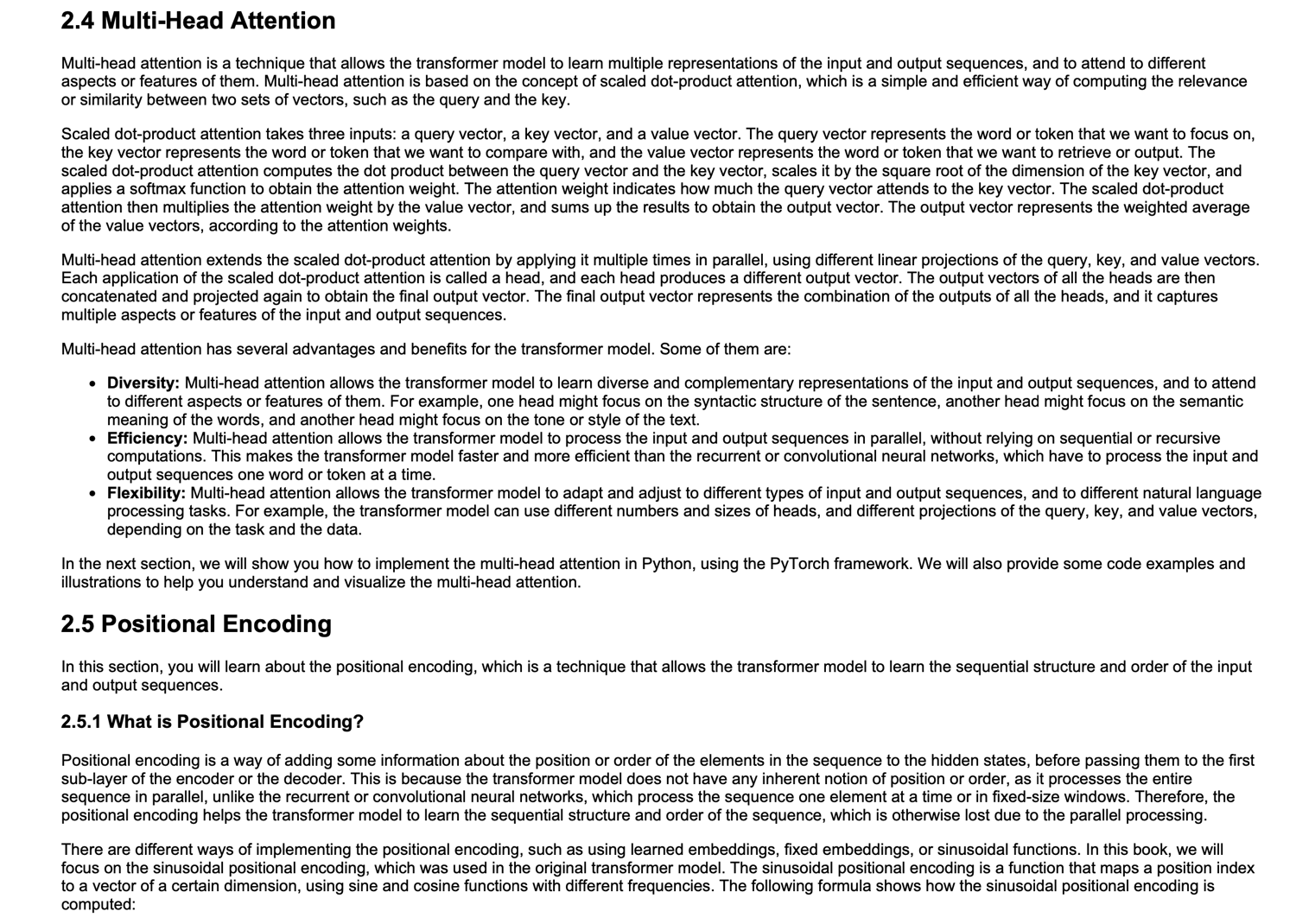

2.4 Multi-Head Attention

2.5 Positional Encoding

2.6 Feed-Forward Networks

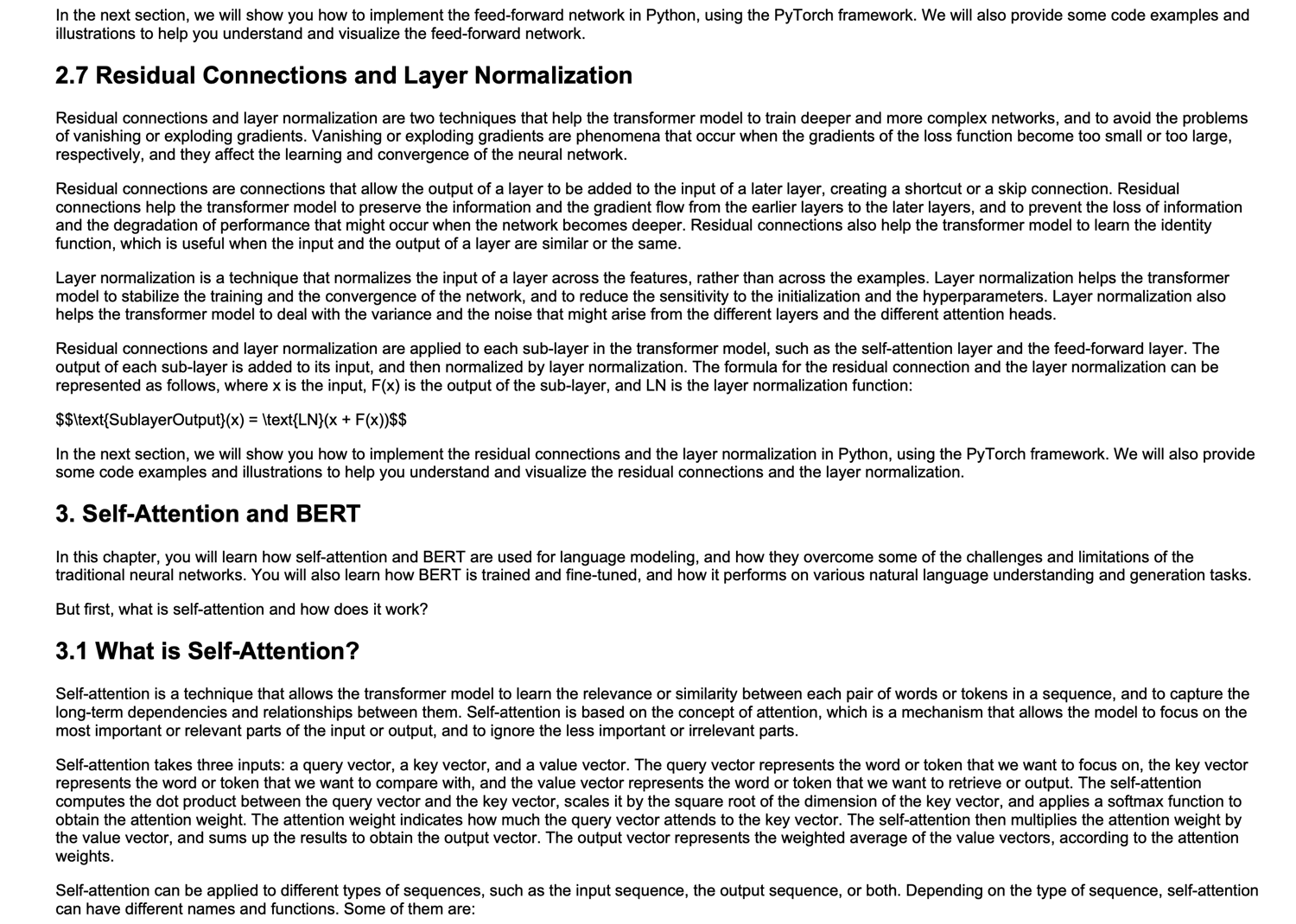

2.7 Residual Connections and Layer Normalization

3. Self-Attention and BERT

3.1 What is Self-Attention?

3.2 How Does Self-Attention Work?

3.3 Scaled Dot-Product Attention

3.4 Masked Self-Attention

3.5 Bidirectional Encoder Representations from Transformers (BERT)

3.6 BERT Architecture

3.7 BERT Pre-Training and Fine-Tuning

4. Applications of BERT

4.1 Natural Language Understanding Tasks

4.2 Natural Language Generation Tasks

4.3 BERT Variants and Extensions

4.4 BERT Limitations and Challenges

5. Conclusion

5.1 Summary of the Book

5.2 Future Directions of Research

5.3 Resources and References